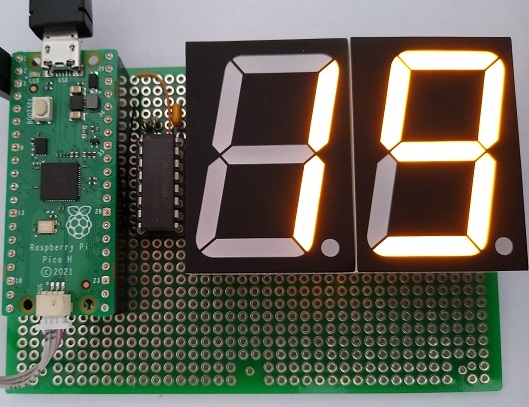

This project was prompted by the need for a circuit to provide a galvanically-isolated measurement of battery voltage. Existing designs tend to be complicated, expensive or both, so I adopted a new approach that keeps the circuitry simple and relies on the timing capability of a CPU. I’m using a Pi Pico RP2040, but almost any microcontroller would do, so long as it has a simple pulse-timing capability.

Why does the measurement have to be isolated? In my case, the equipment is in an environment with a lot of electrical noise, so good isolation is essential, but the technique has other advantages:

- Measurement of voltages that are floating with respect to ground

- Avoidance of any ground-loops

- Protection against transients on the lines being measured

- Simple hardware that can be implemented using surface-mount or robust through-hole devices

- Low-cost readily-available parts

- Negligible load-current when not measuring

The last point is quite important since in battery-powered systems, we don’t want the monitoring system to act as a continuous drain on the battery itself.

Operating method

A standard method for converting an analogue voltage to digital is the dual-slope ADC, whereby a capacitor is charged from the unknown voltage for a fixed time, then discharged at a rate set by a known ‘reference’ voltage; the ratio of the two times indicates the value of the unknown voltage.

One obvious simplification would be to just use a single slope; measure the time taken to charge the capacitor to a known voltage, and since capacitor-charging follows a known exponential curve, a simple equation would yield the voltage value.

The big problem with this approach is the capacitor itself; there are many types of capacitor, but they all share the same characteristic to a greater-or-lesser extent: their capacitance value is not very accurately defined. If you look through a typical electronics catalogue, there aren’t many capacitors below 10% tolerance, and many are far worse than that. Then there is the issue of the capacitor value changing with temperature & age, and even in some cases, the applied voltage.

So it is important to minimise the effect of a change in capacitance, by basically comparing the time taken to charge up using the unknown voltage with the time taken to discharge from a known voltage. The charge & discharge times can easily be measured by a microcontroller, using digital signals that have been passed through an opto-coupler to provide isolation.
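To see why a ratio of times is insensitive to the capacitor value, here is a minimal sketch using an idealised model: plain exponential charge and discharge, with no divider loading and no TL431 clamping. The resistor and voltage values, and the function names `rc_time` and `time_ratio`, are illustrative assumptions and are not taken from the circuit.

```c
// Illustrative values only (not taken from the circuit diagram)
#define R_CHARGE    4700.0      // Charging resistance, ohms
#define R_DISCHARGE 20000.0     // Discharging resistance, ohms
#define V_SUPPLY    12.0        // Voltage being measured
#define V_THRESH    5.0         // Switching threshold

// Time for capacitor c to move from v_start to v_stop when connected
// through resistance r to v_src, by Euler integration in 0.1 us steps.
// Returns -1 if the target is not reached within 1 second.
double rc_time(double c, double r, double v_src, double v_start, double v_stop)
{
    const double dt = 1e-7;
    int rising = v_stop > v_start;
    double v = v_start, t = 0.0;
    while (rising ? v < v_stop : v > v_stop)
    {
        v += (v_src - v) / (r * c) * dt;
        t += dt;
        if (t > 1.0)
            return -1.0;
    }
    return t;
}

// Ratio of charge time to discharge time for a given capacitance
double time_ratio(double c)
{
    double t_chg = rc_time(c, R_CHARGE, V_SUPPLY, 0.0, V_THRESH);
    double t_dis = rc_time(c, R_DISCHARGE, 0.0, V_SUPPLY, V_THRESH);
    return t_chg / t_dis;
}
```

In this model, `time_ratio(1.0e-6)` and `time_ratio(1.2e-6)` agree closely (about 0.145 with these values), even though each individual time is 20% longer with the larger capacitor.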

Shunt voltage reference

The TL431 is known as a ‘shunt voltage reference’ since its primary function is to clamp a voltage to a known value. It is also known as a ‘programmable zener diode’ which accounts for the surprising terminology, whereby the anode is the negative terminal, and the cathode is the positive terminal. The anode-cathode junction is essentially open-circuit until the voltage of the reference pin is around 2.5 volts (2.470 to 2.520 V according to the TL432A datasheet), when that junction will conduct. So in the above circuit diagram, if R2 and R3 are equal, and the input voltage is over 5V, then the output voltage will be clamped to 5 volts.

However, the cathode line doesn’t necessarily have to go to the R1 R2 junction; in my design it goes to an optocoupler diode. This means that the opto will be energised when the reference pin reaches 2.5 volts. An additional side effect (not explicitly mentioned in the datasheet) is that the reference pin is clamped to 2.5V; this becomes relevant when simulating the circuit.
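The reference tolerance quoted above feeds straight through the divider into the switching threshold. A small sketch, assuming an ideal divider with no current flowing into the reference pin; the function and parameter names are hypothetical.

```c
// Voltage across a two-resistor divider at which the tap reaches v_ref.
// r_upper is between the input and the reference pin, r_lower is between
// the reference pin and the anode. Reference-pin current is ignored.
double switch_on_voltage(double v_ref, double r_upper, double r_lower)
{
    return v_ref * (r_upper + r_lower) / r_lower;
}
```

With any two equal resistors, a reference of 2.470 V gives a threshold of 4.94 V, and 2.520 V gives 5.04 V, so the nominal 5 V switching point can be expected to vary by roughly 1% from part to part.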

Charge cycle

To satisfy the requirement for near-zero current when not measuring, a digital signal (through an opto-coupler) is used to start the measurement. I’ve used a FET opto-coupler, since its close-to-zero on-resistance is useful for simplifying the design, but a conventional Bipolar Junction Transistor (BJT) type could be used instead, taking into account the voltage drop across its emitter-collector junction.

Capacitor C1 is charged through R1, with resistors R2 & R3 acting as a load across the capacitor. Once the capacitor is fully charged, the voltage across it will be:

VCmax = VIN * (R2+R3) / (R1+R2+R3)

So if the input is 12V, the final voltage across C1 will be 9.717 volts. The standard value for measuring the charge-time of a capacitor is the ‘time constant’, which is the time taken to charge it to 63.2% of the final voltage, and if R2 and R3 weren’t there, this would be equal to C1 * R1, which is 4.7 milliseconds. The effect of R2 and R3 is to reduce the time-constant as if R2 + R3 were placed in parallel with R1, so the time-constant becomes:

TC = C1 * (R1 * (R2 + R3) / (R1 + (R2 + R3)))

This reduces the time-constant to 3.806 ms. The equation for the voltage across C1 is:

V = VS * (1 - exp(-T / TC))

Where:

VS is the supply voltage (for this circuit, the final capacitor voltage VCmax)

T is time in seconds

TC is time-constant (C * R)

It follows that the equation to calculate the time to reach a given voltage (using the natural logarithm) is:

T = -log(1 - (V / VS)) * TC

The way we’ll measure charge-time is to start a clock when the opto-coupler is energised, and stop that clock when the TL431 device switches on; since R2 has the same value as R3, the switch-on will happen when the voltage across C1 is 5V. So the time to switch on for a 12V supply will be:

-log(1 - (5 / VCmax)) * TC = 0.002751 seconds

Discharge cycle

Once the voltage across C1 has stabilised, the CPU can switch off the supply to the U1 optocoupler, and the voltage across C1 will decay; when it falls below 5V, the TL431 will switch off, switching off U2. So the discharge time is measured between U1 and U2 being switched off.
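The charge and discharge timing sequence might be sketched as below. This is not the code used in this project: the hardware is reached through a hypothetical set of function pointers (`adc_hw_t`), and the simulated hardware at the end uses made-up delays so the sequence can be tried on a PC.

```c
#include <stdbool.h>
#include <stdint.h>

// Hardware hooks (hypothetical names, to be supplied by the application)
typedef struct {
    void (*set_drive)(bool on);     // Energise or release the U1 opto-coupler
    bool (*sense_active)(void);     // True while the U2 opto-coupler is on
    uint32_t (*now_us)(void);       // Free-running microsecond counter
    void (*delay_us)(uint32_t us);  // Busy-wait or sleep
} adc_hw_t;

typedef struct {
    uint32_t charge_us, discharge_us;
    bool ok;                        // False if either phase timed out
} adc_times_t;

// Wait until the sense input equals 'state'; report time since 'start'
static bool wait_sense(const adc_hw_t *hw, bool state, uint32_t start,
                       uint32_t timeout_us, uint32_t *elapsed)
{
    while (hw->sense_active() != state)
    {
        if ((uint32_t)(hw->now_us() - start) > timeout_us)
            return false;
    }
    *elapsed = hw->now_us() - start;
    return true;
}

// Run one charge/discharge cycle and return both times
adc_times_t adc_measure(const adc_hw_t *hw, uint32_t settle_us, uint32_t timeout_us)
{
    adc_times_t t = { 0, 0, false };
    hw->set_drive(true);                // Start charging C1
    uint32_t start = hw->now_us();
    if (!wait_sense(hw, true, start, timeout_us, &t.charge_us))
    {
        hw->set_drive(false);           // Timed out: leave the circuit unpowered
        return t;
    }
    hw->delay_us(settle_us);            // Let the voltage across C1 stabilise
    hw->set_drive(false);               // Start discharging
    start = hw->now_us();
    t.ok = wait_sense(hw, false, start, timeout_us, &t.discharge_us);
    return t;
}

// Simulated hardware for trying the sequence on a PC (entirely made up):
// U2 switches on 3000 us after U1 is energised, and off 10000 us after release
static uint32_t sim_clock = 100000, sim_changed = 0;
static bool sim_drive = false;

static void sim_set_drive(bool on) { sim_drive = on; sim_changed = sim_clock; }
static uint32_t sim_now_us(void) { return sim_clock++; }    // Each read costs 1 us
static void sim_delay_us(uint32_t us) { sim_clock += us; }
static bool sim_sense_active(void)
{
    uint32_t since = sim_clock - sim_changed;
    return sim_drive ? since >= 3000 : since < 10000;
}
```

Filling an `adc_hw_t` with the four `sim_` functions and calling `adc_measure(&hw, 20000, 50000)` returns times close to the simulated 3000 and 10000 microseconds. On a Pico, the hooks could presumably wrap SDK calls such as `gpio_put()`, `gpio_get()`, `time_us_32()` and `sleep_us()`, taking account of whether the opto-coupler outputs are active-high or active-low on the board in question.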

The discharge time would be quite easy to calculate were it not for the fact that the TL431 clamps the reference pin to 2.5 volts with respect to the anode, so the discharge isn’t a simple exponential curve.

The best way to calibrate the ADC is to use known supply voltages, and use the microcontroller to report the charge & discharge times, and the ratio between them, e.g.

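| Supply (V) | Charge time | Discharge time | Ratio (charge / discharge) |
|---|---|---|---|
| 20 | 1.784 | 16.682 | 0.1069 |
| 19 | 1.891 | 15.981 | 0.1183 |
| 18 | 2.013 | 15.254 | 0.1320 |
| 17 | 2.154 | 14.487 | 0.1487 |
| 16 | 2.318 | 13.662 | 0.1697 |
| 15 | 2.513 | 12.793 | 0.1964 |
| 14 | 2.746 | 11.854 | 0.2317 |
| 13 | 3.031 | 10.841 | 0.2796 |
| 12 | 3.391 | 9.740 | 0.3482 |
| 11 | 3.859 | 8.533 | 0.4522 |
| 10.5 | 4.144 | 7.864 | 0.5270 |
| 10 | 4.495 | 7.195 | 0.6247 |
| 9.5 | 4.897 | 6.448 | 0.7595 |
| 9 | 5.420 | 5.680 | 0.9542 |
| 8.5 | 6.046 | 4.842 | 1.2487 |
| 8 | 6.916 | 3.950 | 1.7509 |
| 7.5 | 8.100 | 2.977 | 2.7209 |
| 7 | 10.048 | 1.915 | 5.2470 |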

The tests have been done in descending order of supply voltage, so the time ratios are in ascending order, which simplifies the process of converting a measured time ratio into a voltage using linear interpolation:

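    #define NUM_CAL_VALS 16

    // Calibration table for supplies from 20 V down to 8 V:
    // charge/discharge time ratios, in ascending order...
    static const float cal_ratios[NUM_CAL_VALS] = { 0.1069, 0.1183, 0.1320, 0.1487,
        0.1697, 0.1964, 0.2317, 0.2796, 0.3482, 0.4522,
        0.5270, 0.6247, 0.7595, 0.9542, 1.2487, 1.7509 };
    // ...and the corresponding supply voltages
    static const float cal_volts[NUM_CAL_VALS] = { 20, 19, 18, 17,
        16, 15, 14, 13, 12, 11,
        10.5, 10, 9.5, 9, 8.5, 8 };

    // Return interpolated value, given a table of ascending input values.
    // Inputs below the first entry are extrapolated from the first segment;
    // inputs at or above the last entry return 0.
    float interp(float val, const float *invals, const float *outvals, int size)
    {
        for (int i = 0; i < size - 1; i++)
        {
            if (invals[i + 1] > val)
            {
                float diff = val - invals[i];
                float indiff = invals[i + 1] - invals[i];
                float outdiff = outvals[i + 1] - outvals[i];
                return outvals[i] + outdiff * diff / indiff;
            }
        }
        return 0.0f;
    }

    // Convert charge & discharge times to a voltage (wrapper name is illustrative).
    // Returns 0 if the discharge time is invalid.
    float times_to_volts(float chg_t, float dis_t)
    {
        if (dis_t <= 0)
            return 0.0f;
        return interp(chg_t / dis_t, cal_ratios, cal_volts, NUM_CAL_VALS);
    }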
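Since the interpolation depends on the ratio table being in ascending order, it may be worth checking a freshly measured calibration set before using it. A minimal sketch; the function names `make_ratios` and `is_ascending` are hypothetical and are not part of the code above.

```c
#include <stdbool.h>

// Fill 'ratios' with charge/discharge time ratios; an invalid
// (zero or negative) discharge time gives a ratio of 0
void make_ratios(const float *chg, const float *dis, float *ratios, int size)
{
    for (int i = 0; i < size; i++)
        ratios[i] = dis[i] > 0 ? chg[i] / dis[i] : 0;
}

// Return true if every entry is greater than the one before it
bool is_ascending(const float *vals, int size)
{
    for (int i = 1; i < size; i++)
    {
        if (vals[i] <= vals[i - 1])
            return false;
    }
    return true;
}
```

Feeding in the first three rows of the calibration data (charge times 1.784, 1.891, 2.013 and discharge times 16.682, 15.981, 15.254) gives ratios that match the listed 0.1069, 0.1183 and 0.1320, and `is_ascending()` returns true for them.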

Design changes

There is plenty of scope for changes or improvements to this design; for example, the current component values give a useful measurement range of 8 to 20 volts, which can be increased by changing R1, though it is necessary to bear in mind the ‘absolute maximum’ anode-cathode voltage of the TL431, which is 37 volts.
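When experimenting with R1, a useful sanity check is the lowest supply voltage at which C1 can still reach the switch-on threshold, which follows from the VCmax equation. A short sketch; the function name is hypothetical, and the resistor values in the note below are assumptions inferred from the figures quoted earlier.

```c
// Lowest supply voltage for which the final voltage across C1
// equals the switch-on voltage; below this the TL431 never turns on
double min_supply(double r1, double r2, double r3, double v_switch)
{
    return v_switch * (r1 + r2 + r3) / (r2 + r3);
}
```

Assuming R1 is 4.7K with R2 and R3 at 10K each, this gives 6.175 V, which fits with the charge time in the calibration data growing rapidly as the supply approaches 7 V. Raising R1 to 10K would move the limit up to 7.5 V.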

The capacitor value can be increased to give longer charge & discharge times, but the overall measurement time will increase as well, as the discharge cycle should only be started when the capacitor is fully charged. A suitable candidate would be a 10 uF tantalum device, but don’t use a wide-tolerance part such as an aluminium electrolytic, if you want the measurements to have long-term repeatability.

Copyright (c) Jeremy P Bentham 2023. Please credit this blog if you use the information or software in it.